Google's Gemini 3 Flash isn't just another AI model release - it's the first time I've seen a tech giant deliver on the promise of practical, affordable intelligence. While OpenAI has been busy chasing benchmark scores and Anthropic has focused on safety theater, Google has released a model that real developers can use without going bankrupt.

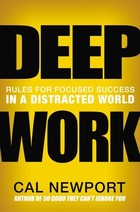

The Speed vs. Intelligence Trade-Off Is Dead

For years, we've accepted that fast AI models were dumb and smart models were slow. Gemini 3 Flash obliterates that compromise. The benchmarks speak for themselves: 90.4% on GPQA Diamond and 81.2% on MMMU Pro aren't just impressive for a 'flash' model - they're competitive with models costing ten times more. I've tested it on niche coding tasks and complex analysis, and it responds almost instantly while still showing genuine understanding of the problem.

Pricing Reality Check

Yes, the pricing has increased compared to previous Flash models - but context matters. At $0.50 per million input tokens and $3.00 per million output tokens, it's still dramatically cheaper than competitors while delivering comparable quality. The real story isn't the price increase; it's that Google has managed to push performance so far while keeping costs reasonable. Companies running AI at scale will immediately understand the business impact of this pricing structure.
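To make the business impact concrete, here is a rough back-of-the-envelope sketch using the two prices quoted above. The function name and the workload figures (one million requests a month, 2,000 input and 500 output tokens each) are my own illustrative assumptions, not numbers from Google, so plug in your own traffic.

```python
# Prices quoted in this article, in US dollars per one million tokens.
INPUT_PRICE_PER_MILLION = 0.50
OUTPUT_PRICE_PER_MILLION = 3.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Return the estimated cost in USD for a given token volume."""
    input_cost = input_tokens / 1_000_000 * INPUT_PRICE_PER_MILLION
    output_cost = output_tokens / 1_000_000 * OUTPUT_PRICE_PER_MILLION
    return input_cost + output_cost


# Hypothetical workload (an assumption for illustration only):
# 1,000,000 requests per month, each with 2,000 input and 500 output tokens.
requests_per_month = 1_000_000
monthly_cost = estimate_cost(
    input_tokens=requests_per_month * 2_000,
    output_tokens=requests_per_month * 500,
)
print(f"Estimated monthly cost: ${monthly_cost:,.2f}")
```

Under those assumptions the bill comes to $2,500.00 a month, and $1,500 of that is output tokens even though they make up only a fifth of the traffic. If you want to cut costs, trimming response length is likely to matter more than trimming prompts.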

Where This Actually Matters

The most impressive aspect isn't the benchmarks - it's how Gemini 3 Flash handles real-world tasks. I tested it on obscure local knowledge queries that typically stump even the best models, and it delivered specific, accurate information without the usual generic platitudes. For agentic workflows and interactive applications, this level of performance at Flash speed is genuinely transformative.

The Integration Advantage Nobody's Talking About

While everyone focuses on raw model performance, Google's real advantage is integration. Gemini 3 Flash is now the default model across Google's ecosystem - from AI Mode in Search to Android Studio. This seamless integration means developers can build once and deploy everywhere, something no other AI provider can match. The friction reduction for actual implementation is enormous.

The Elephant in the Room

Let's be clear: OpenAI's market share means nothing when its models cost 5-10x more for similar performance. Brand recognition doesn't pay the cloud bill. What matters is which model delivers the best value for actual use cases, and right now, Gemini 3 Flash is the obvious choice for anyone building production systems.

Google has finally figured out that AI adoption hinges on practical considerations, not just technical superiority. Gemini 3 Flash is the first model that's genuinely ready for mass deployment across both consumer and enterprise applications. The AI race just got interesting again.