Google CEO Sundar Pichai has recently embraced a phrase, coined by AI researcher Andrej Karpathy in early 2025, that has shareholders salivating and senior engineers reaching for the aspirin: "Vibe Coding."

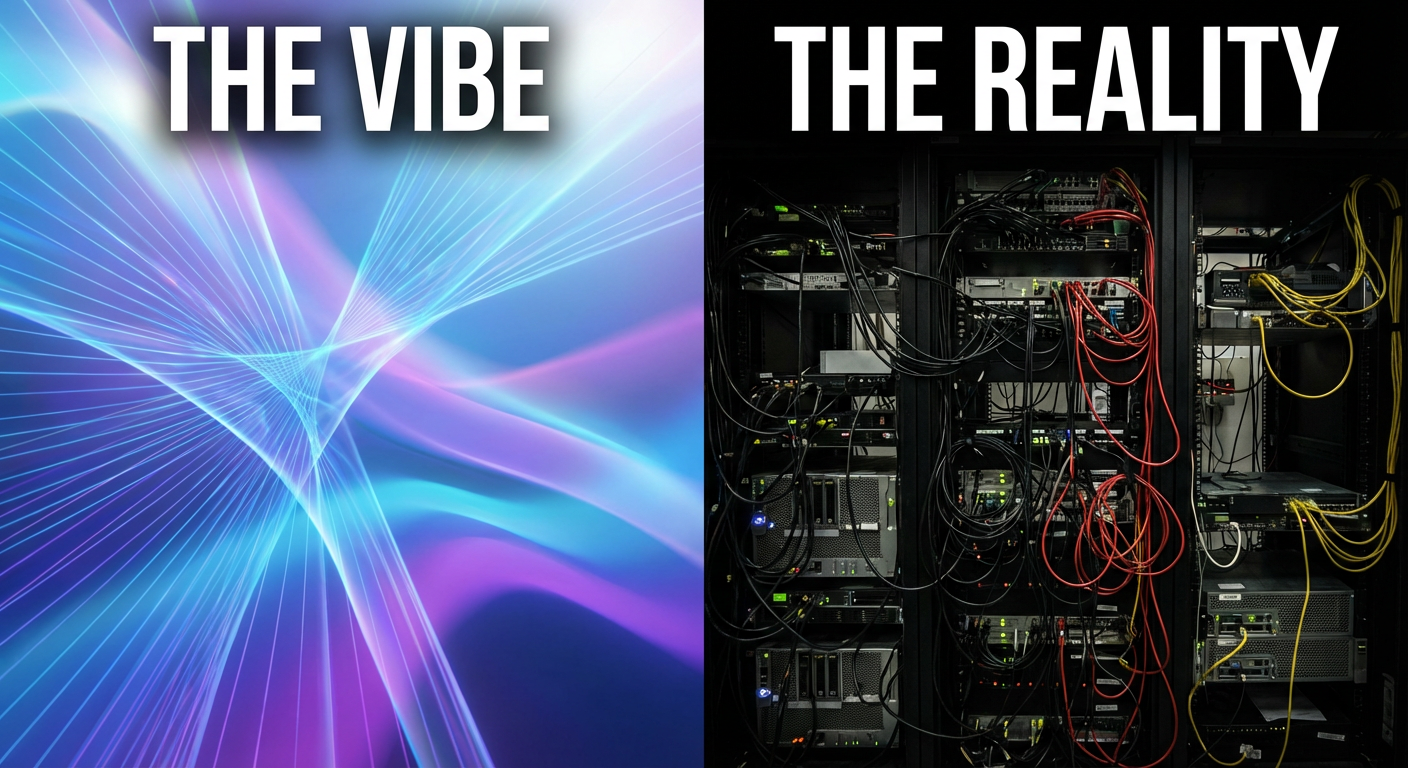

The premise is seductive. In Pichai’s vision, natural language is the new syntax. You don't need to understand memory management, recursion, or race conditions. You just need a "vibe." You describe what you want to an AI, and it manifests the software into existence. It is the ultimate democratization of computer science—a world where the barrier to entry drops from "years of study" to "can you type a sentence?"

But if you listen closely to the people who actually build software for a living, the reaction isn't excitement. It's deep, structural skepticism.

The "Selling Shovels" Syndrome

To understand why Big Tech is pushing this narrative so aggressively, you have to look at the balance sheet, not the GitHub repository.

We are witnessing a classic gold rush dynamic. The companies building the Large Language Models (LLMs)—Google, OpenAI, Microsoft—are selling the shovels at the entrance to the mine. It is in their existential interest to convince the world that there is gold in every hill and that anyone can dig for it.

If AI were truly capable of autonomously replacing engineering teams with "vibes," one would expect these very companies to be operating with skeleton crews. Instead, they employ thousands of the world's most expensive engineers to keep the lights on. When a CEO tells you that their product is magic, remember that you are hearing an executive selling a product, not an architect describing a blueprint.

The "Impact Driver" Fallacy

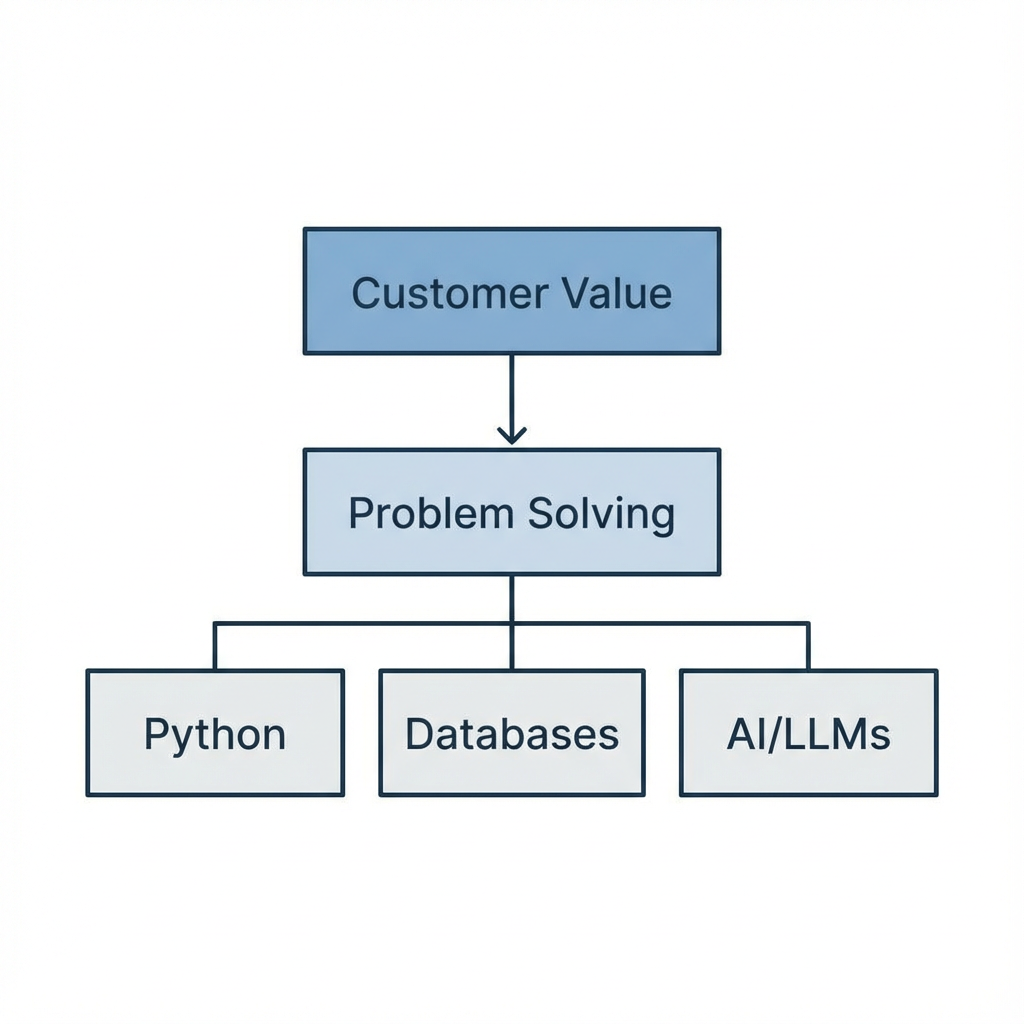

A growing number of companies are demanding an "AI First" approach, which baffles seasoned professionals. As one industry veteran put it, being "AI First" is like a carpenter saying they are "Impact Driver First."

AI is a tool. It is not the craftsman.

When you elevate the tool above the outcome, you lose sight of customer value. The goal of software engineering isn't to generate code; it's to solve problems. Sometimes AI solves those problems efficiently. Often, it introduces subtle, hallucinated bugs that take longer to fix than writing the code from scratch would have.

Where the Vibes Actually Work

This isn't to say the technology is useless. Far from it. The consensus among pragmatic developers is that AI is incredible for "throwaway code."

Need a Python script to ping every device in your lab, check a specific API endpoint, and log the results to a spreadsheet? AI can write that in 45 seconds; a human might spend 20 minutes just reading the documentation.

This is the sweet spot: low-risk, standalone scripts that don't need to be maintained, scaled, or integrated into a massive legacy monolith. For prototyping, boilerplate, and "quick and dirty" utilities, the vibe is genuinely good.
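For a sense of scale, here is a minimal sketch of that lab script using only the Python standard library. Everything specific in it is a hypothetical assumption: the device addresses (taken from the 192.0.2.0/24 documentation range), the `HEALTH_URL` endpoint, the output file name, and the use of a TCP connection to port 22 as a stand-in for ICMP ping, which usually needs elevated privileges. It writes a CSV file, which any spreadsheet can open, rather than a native spreadsheet format.

```python
import csv
import socket
import urllib.error
import urllib.request
from datetime import datetime, timezone

# Hypothetical inventory and endpoint (192.0.2.0/24 is reserved for documentation).
DEVICES = ["192.0.2.10", "192.0.2.11", "192.0.2.12"]
HEALTH_URL = "http://192.0.2.10:8080/health"
OUTPUT_CSV = "lab_sweep.csv"
FIELDS = ["timestamp", "target", "check", "result"]


def is_reachable(host: str, port: int = 22, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds.

    Assumption: a TCP connect stands in for ping. It only proves that one
    port is open, not that the device is healthy.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def check_endpoint(url: str, timeout: float = 2.0) -> str:
    """Return the HTTP status code, or the exception name if the call failed."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return str(resp.status)
    except urllib.error.HTTPError as exc:  # server answered with 4xx/5xx
        return str(exc.code)
    except OSError as exc:  # URLError, timeouts, refused connections
        return type(exc).__name__


def sweep(devices: list[str], url: str, port: int = 22, timeout: float = 1.0) -> list[dict]:
    """Check every device plus the API endpoint; one row per check."""
    stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
    rows = [
        {"timestamp": stamp, "target": host, "check": f"tcp/{port}",
         "result": "up" if is_reachable(host, port, timeout) else "down"}
        for host in devices
    ]
    rows.append({"timestamp": stamp, "target": url, "check": "http",
                 "result": check_endpoint(url, timeout)})
    return rows


def write_csv(rows: list[dict], path: str) -> None:
    """Write the rows to a CSV file that a spreadsheet can open."""
    with open(path, "w", newline="") as fh:
        writer = csv.DictWriter(fh, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)
```

Calling `write_csv(sweep(DEVICES, HEALTH_URL), OUTPUT_CSV)` produces one row per check. Nobody minds that it is rough: it has no retries, no configuration, and no tests, and if it breaks next month you simply regenerate it.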

The Technical Debt Trap

The danger arises when "vibe coding" is applied to enterprise architecture.

"Coding" has been accessible since the 1980s; the hard part has always been engineering. Engineering is about maintainability, security, and system design.

If an AI generates 500 lines of code in two minutes, you haven't saved time; you've created a liability. You cannot validate a solution you don't understand. If a junior developer copies code from Stack Overflow without understanding it, it's bad practice. If a "vibe coder" generates an entire application without understanding the underlying logic, it's a ticking time bomb.

When the system breaks—and it always breaks—the "vibe" won't tell you why the database is deadlocking or why the authentication token is leaking. Only a human with a mental model of the system can do that.
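The deadlock case has a classic cause that only a mental model exposes: two transactions that take the same locks in opposite order. The sketch below is an analogy, not database code. Python threads stand in for transactions and `threading.Lock` objects for row locks; the function name `deadlocks` and the rows "A" and "B" are hypothetical. A barrier forces the unlucky interleaving on every run, and lock timeouts turn an infinite wait into a result you can inspect.

```python
import threading


def deadlocks(order_1: str, order_2: str, wait: float = 1.0) -> bool:
    """Run two toy 'transactions' that each lock rows "A" and "B".

    order_1 and order_2 give the order in which each transaction takes the
    locks, e.g. "AB" or "BA". Returns True if at least one transaction had
    to give up on its second lock, i.e. without timeouts both would wait forever.
    """
    rows = {"A": threading.Lock(), "B": threading.Lock()}
    both_hold_first = threading.Barrier(2)
    gave_up = []

    def transaction(order: str) -> None:
        first, second = rows[order[0]], rows[order[1]]
        with first:
            try:
                # Line both threads up so each holds its first lock before
                # asking for its second: the unlucky interleaving, every run.
                both_hold_first.wait(timeout=wait)
            except threading.BrokenBarrierError:
                # The other thread never arrived because it is queued on our
                # first lock (same lock order), so no cycle can form.
                pass
            if second.acquire(timeout=wait):
                second.release()
            else:
                gave_up.append(order)  # a real engine would abort one side here

    threads = [threading.Thread(target=transaction, args=(o,)) for o in (order_1, order_2)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return bool(gave_up)
```

`deadlocks("AB", "BA")` reports a deadlock, while `deadlocks("AB", "AB")` does not: taking locks in one agreed order removes the cycle. Real engines such as PostgreSQL detect these cycles and abort one of the transactions with a deadlock error, but knowing why the cycle exists, and which code path to reorder, still takes a human who understands the system.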

Conclusion: The Bubble and the Bloodbath

We are likely in a hype bubble that is dangerously close to popping. Leaders are pushing AI to drive stock prices, often creating organizational chaos by devaluing human expertise. They are generating vast amounts of text and code that no one reads and no one verifies, creating an ouroboros of digital waste.

Eventually, the dust will settle. AI will remain a powerful assistive tool—a super-powered autocomplete that helps skilled professionals work faster. But the idea that we can replace engineering culture with "vibes" is a fantasy that will likely end in a lot of broken production environments.

Until the AI can wake up at 3:00 AM to fix a server outage it caused, keep your engineers happy. You're going to need them.