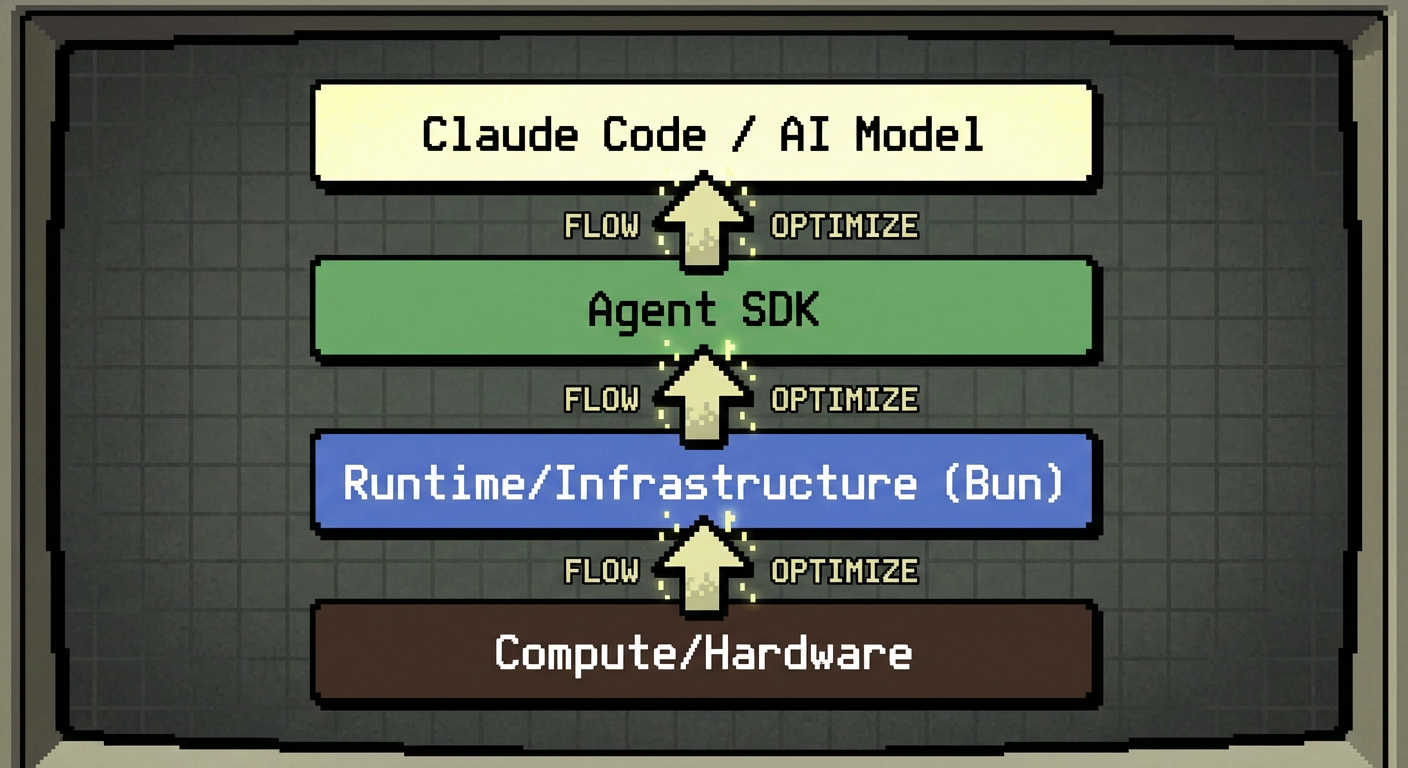

The tech world recently witnessed an acquisition that, at first glance, feels like a category error. Anthropic, a leading AI research lab and creator of Claude, acquired Bun, the high-performance JavaScript runtime, bundler, and package manager.

On the surface, an AI model company buying a Node.js competitor looks like a car manufacturer buying a specific brand of wrench. But given how AI agents are evolving, the move signals a pivotal shift in the industry: the vertical integration of the AI coding stack.

Securing the Execution Layer

The immediate logic is defensive. Claude Code, Anthropic’s agentic coding tool, ships as a Bun executable. It relies heavily on Bun’s ability to bundle dependencies, run TypeScript without a separate build step, and start up quickly. As the official announcement noted, if Bun breaks, Claude Code breaks. By bringing the runtime in-house, Anthropic secures the stability of its product delivery vehicle.

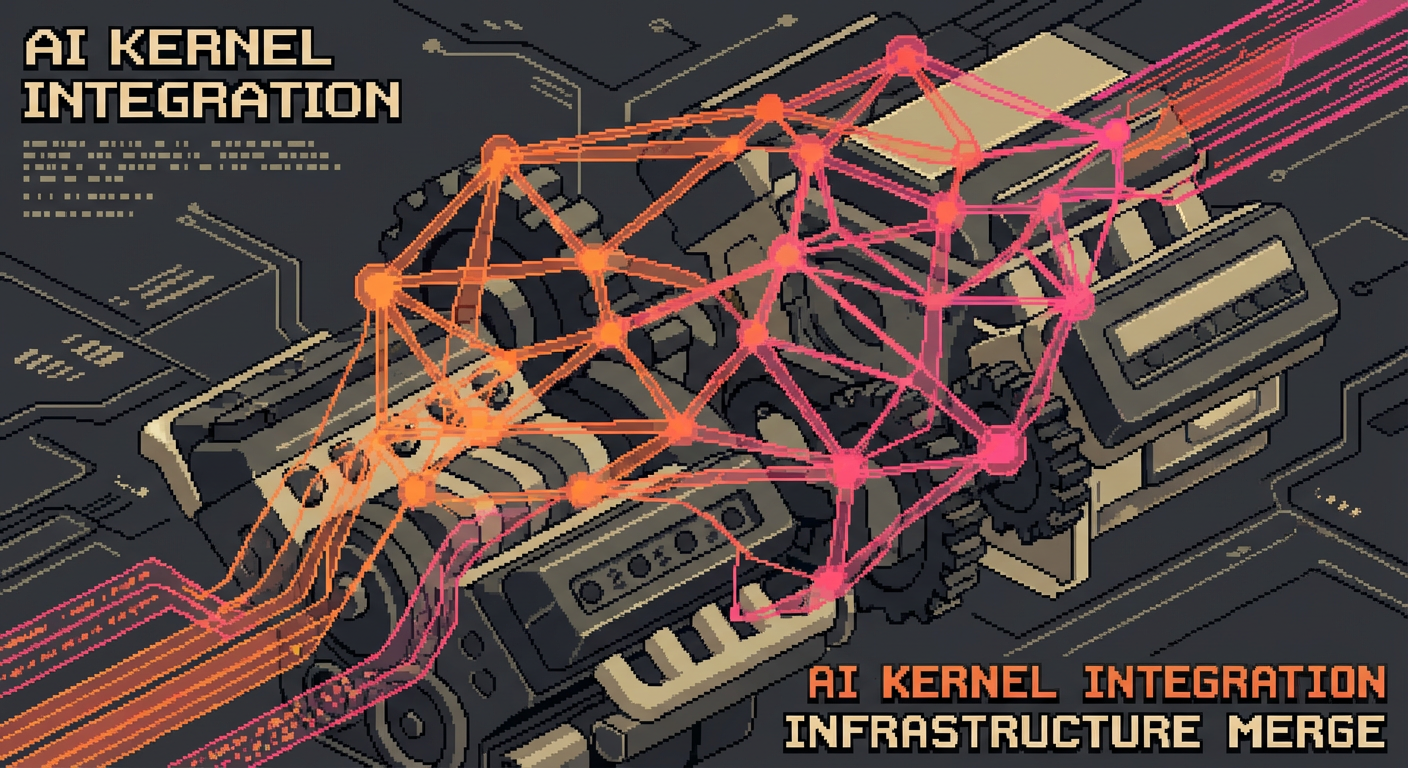

But there is a deeper, offensive strategy here. We are moving from a paradigm where humans write code to one where AI agents generate and execute it. For an agent like Claude to function effectively in enterprise environments, it needs a runtime that is not just a passive interpreter, but a cloud-native toolkit.

Bun has been quietly evolving beyond a simple Node.js replacement. It has integrated S3 APIs, SQL support, and streaming capabilities directly into the runtime. This trajectory aligns well with an AI agent that needs to traverse cloud services, manipulate databases, and manage file systems without the friction of setting up complex external dependencies. Anthropic isn't just buying a runtime; it is buying a low-friction operating environment for its agents.

The Speed Demon and the Voxel Game

The origin story of Bun is a testament to the power of developer frustration. Founder Jarred Sumner wasn't trying to revolutionize the JavaScript ecosystem; he was trying to build a voxel game in the browser. Faced with a 45-second iteration cycle waiting for Next.js to hot reload, he did what any obsessed engineer would do: he stopped building the game and started rebuilding the tools.

Sumner ported the transpiler logic to Zig, a low-level systems programming language known for its performance and safety features (though not without its own steep learning curve). What grew out of that work was a runtime built on JavaScriptCore (the engine powering Safari) rather than V8 (the engine powering Chrome and Node.js).

This architectural choice contributed to significantly faster startup times: up to 4x faster than Node.js in some benchmarks. For long-running servers, startup time is negligible because it is paid only once. But for CLI tools and ephemeral AI agents that spin up, execute a task, and spin down, those milliseconds compound into a substantial user experience advantage.
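To make the compounding concrete, here is a rough back-of-the-envelope sketch. The startup figures (40 ms and 10 ms), the runtime names, and the count of 500 invocations are assumptions picked only to mirror a 4x gap; they are not measurements of Bun, Node.js, or Claude Code.

```typescript
// Hypothetical startup costs in milliseconds; illustrative only, not measured.
interface RuntimeProfile {
  name: string;
  startupMs: number;
}

// Total time spent purely on process startup across many short-lived invocations.
function totalStartupMs(profile: RuntimeProfile, invocations: number): number {
  if (!Number.isInteger(invocations) || invocations < 0) {
    throw new RangeError("invocations must be a non-negative integer");
  }
  return profile.startupMs * invocations;
}

// Assumed figures chosen to reflect a 4x startup gap.
const slower: RuntimeProfile = { name: "runtime-a", startupMs: 40 };
const faster: RuntimeProfile = { name: "runtime-b", startupMs: 10 };

// An assumed agent session that spawns 500 short-lived processes.
const invocations = 500;

const savedMs =
  totalStartupMs(slower, invocations) - totalStartupMs(faster, invocations);

console.log(`Startup time saved over ${invocations} runs: ${savedMs / 1000}s`);
```

Under these assumptions the faster runtime saves 15 seconds of pure waiting over the session. A server that starts once would see only the single 30 ms difference, which is why the gap matters far more for ephemeral workloads.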

Why JavaScript? Why Not Rust?

A common critique arises when discussing high-performance AI tooling: Why double down on JavaScript? Why not build the agent infrastructure in Rust, Go, or C#?

The answer lies in ubiquity. JavaScript (and TypeScript) is the lingua franca of the modern web. If the goal of Claude Code is to empower developers where they already live, it must speak their language. Furthermore, Bun’s "batteries-included" approach, which bundles the test runner, package manager, and transpiler into a single binary, removes much of the configuration hell that plagues the JS ecosystem.

For an AI agent, context switching is costly. An all-in-one runtime allows the AI to write tests, install packages, and execute code within a single, coherent mental model. It simplifies the prompt engineering required to drive the agent, as the tooling creates a predictable, standardized environment.

The Open Source Exit Paradox

This acquisition also highlights a fascinating dynamic in the open-source business model. Bun raised substantial venture capital ($26 million total) and had years of runway left. The company didn't need to sell.

Usually, a VC-backed runtime faces a difficult existential crisis: how to monetize without alienating the community. This often leads to awkward pivots into hosting services or enterprise support contracts that distract from the core technology. By joining Anthropic, Bun effectively skips this "monetization anxiety" phase.

However, this creates a cultural paradox. Bun is written in Zig, a language whose community has taken a staunchly anti-AI stance in its code of conduct. Seeing its flagship success story absorbed by a leading AI lab may cause friction in the contributor ecosystem.

Conclusion

Anthropic’s acquisition of Bun is a signal that code generation is no longer just about the model; it is also about the environment the model lives in. As AI writes more of our software (some predictions have AI writing 90% of code within months), the distinction between the writer (the AI) and the pen (the runtime) is blurring.

Bun provides Claude with a hyper-fast, integrated body to match its brain. For developers, this likely means better tools that remain free, subsidized by the massive capital flowing into AI. The era of the AI-optimized runtime has arrived.