For a decade, December has meant one thing for a specific subset of the developer population: waking up at midnight EST to solve algorithmic puzzles before the coffee has even finished brewing. However, the 2025 edition of Advent of Code (AoC) marks a significant departure from tradition. With a reduced schedule and the elimination of the global leaderboard, the event is signaling a major cultural shift—one that prioritizes human learning over machine-assisted speed.

The AI Elephant in the Room

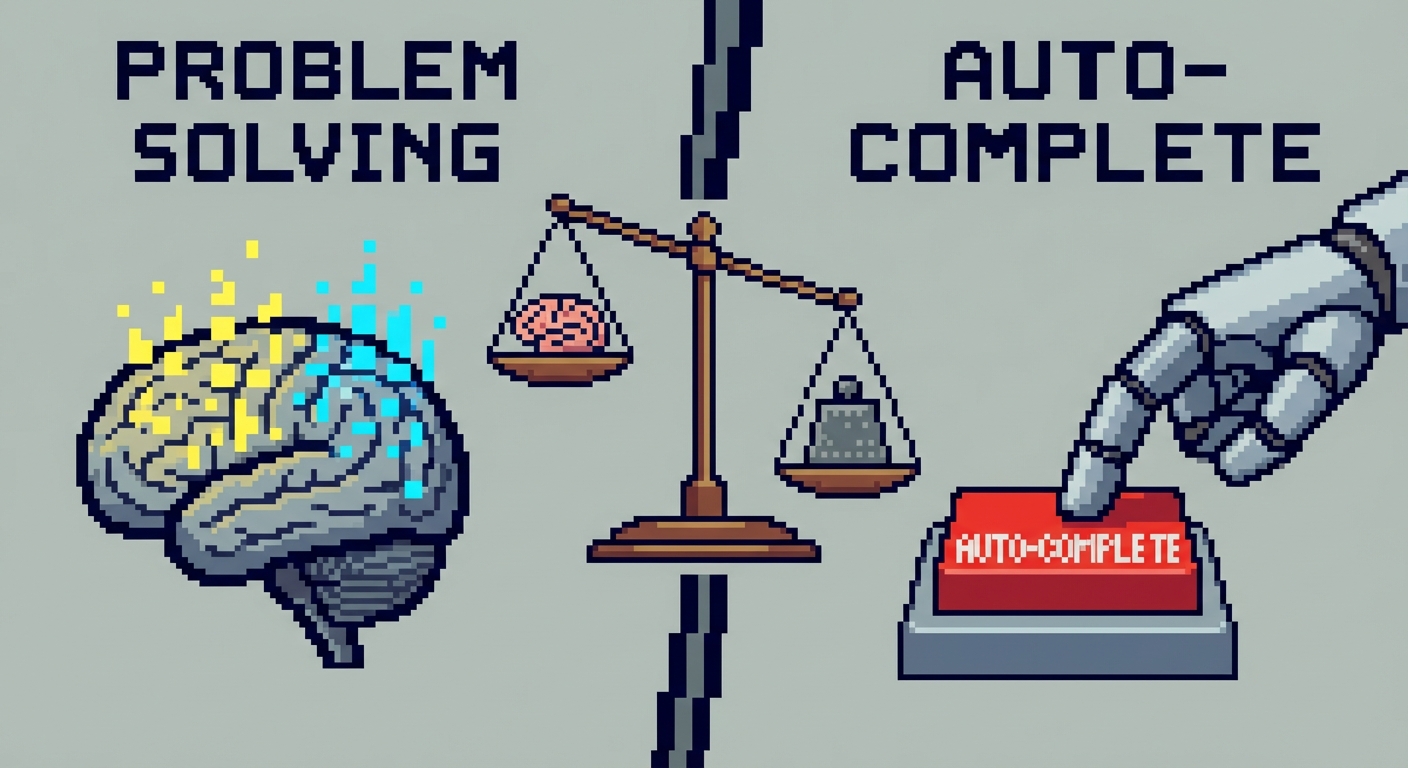

The most striking change this year is the removal of the global leaderboard. For years, the leaderboard was the domain of competitive programmers and speed-coders. However, the rise of Large Language Models (LLMs) has fundamentally broken this mechanic. In recent competitions, the saturation of AI-generated solutions rendered the concept of a human "race" obsolete.

Removing the leaderboard is a tacit admission that we cannot compete with AI on speed, nor should we try. Using an AI to solve these puzzles is akin to sending a friend to the gym on your behalf; the numbers might go up, but you aren't getting any stronger. By de-emphasizing the race, AoC 2025 reclaims the event as a "gym for the brain." It forces participants to confront the logic themselves rather than mastering the art of prompt engineering. This shift returns the focus to the joy of the puzzle rather than the dopamine hit of a rank.

12 Days of Code: Quality Over Quantity

This year's event has been shortened to 12 puzzles, one per day for the first 12 days of December, rather than the traditional 25 running through Christmas Day. While some purists might argue this dilutes the "Advent" concept, it addresses a very real issue: burnout.

Maintaining a daily streak amidst holiday preparations, end-of-year work crunches, and family obligations often turned a fun challenge into a chore. The reduced volume allows participants to savor the problems. Instead of rushing a solution in Python just to get it done, developers might now have the breathing room to attempt the puzzles in a language they are learning, like Rust, Zig, or Haskell. It transforms the event from a sprint into a series of deliberate practice sessions.

The Technical Playground

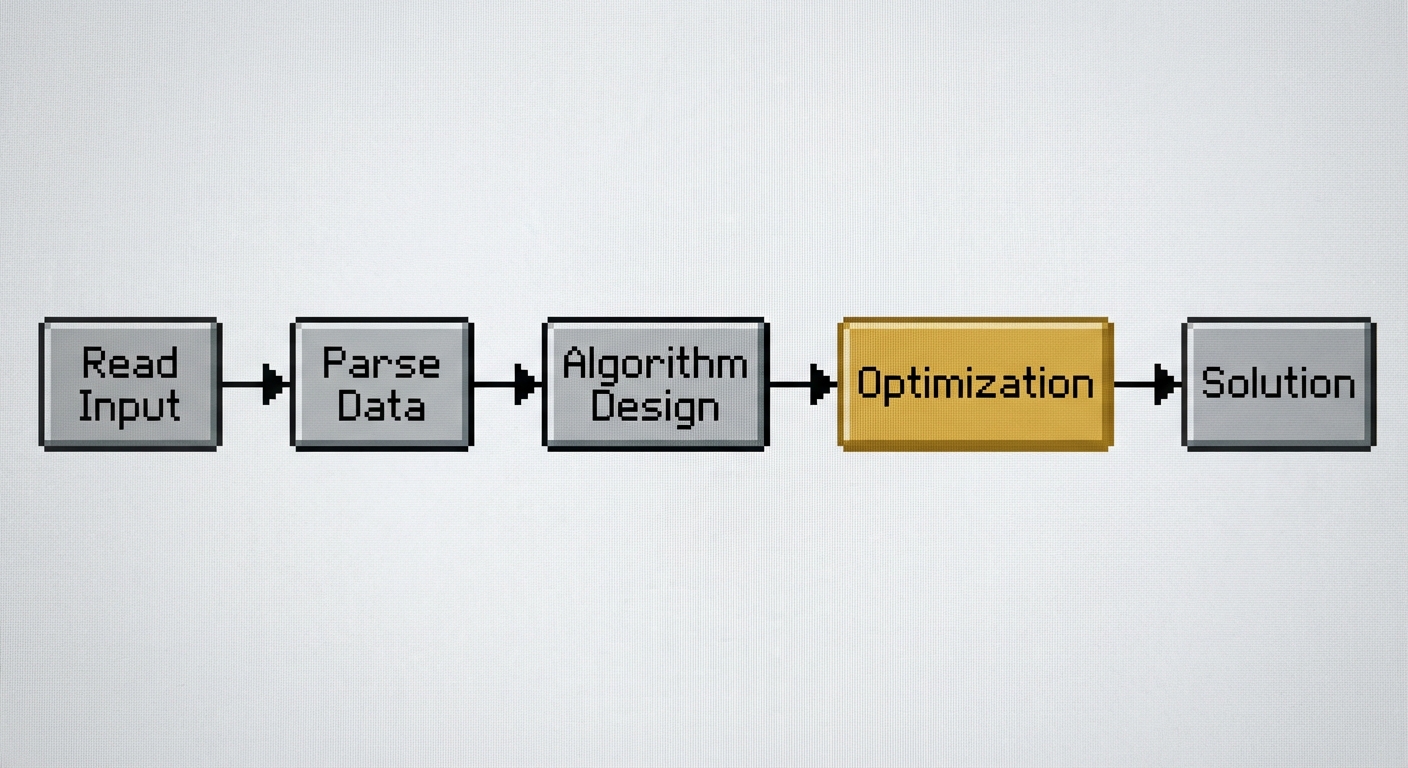

Despite the changes, the core technical philosophy remains accessible. The puzzles are designed to be solvable on decade-old hardware within 15 seconds, emphasizing algorithmic efficiency over raw compute power. This constraint is a beautiful reminder that good code isn't just about getting the right answer; it's about getting the right answer efficiently.
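To keep that constraint visible while working on a puzzle, a small timing wrapper is enough. The following is a minimal sketch: the `timed` helper and the `BUDGET_SECONDS` name are illustrative, and the 15-second figure is simply the one quoted above.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

# The budget quoted in the article; a hypothetical constant name.
BUDGET_SECONDS = 15.0


def timed(solver: Callable[[], T]) -> tuple[T, float]:
    """Run a zero-argument solver and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = solver()
    elapsed = time.perf_counter() - start
    return result, elapsed


if __name__ == "__main__":
    # Stand-in workload; replace the lambda with a real puzzle solver.
    answer, seconds = timed(lambda: sum(range(1_000_000)))
    verdict = "within" if seconds <= BUDGET_SECONDS else "over"
    print(f"answer={answer} in {seconds:.3f}s ({verdict} budget)")
```

A solution that runs far over the budget on a modern machine is usually a hint that a better algorithm exists, not that faster hardware is needed.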

However, participants should remain vigilant regarding technical quirks. Sign-in relies on OAuth, and puzzle inputs are tied to the individual account, so two accounts generally see different input data. There have been reports of "dataset drift," where users debugging across different sessions or devices (for example, after signing in through a different provider) accidentally test against the wrong input. The golden rule remains: if your logic seems sound but the answer is wrong, verify that the input file you are testing against belongs to the account you are submitting from. It is a subtle reminder that in software engineering, the environment is often as much a source of bugs as the code itself.
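One low-effort way to catch a mismatched input is to fingerprint the file and compare the value across machines. This is a sketch under assumptions: the helper names and the `input.txt` path are hypothetical, and Advent of Code itself does not publish any checksum to compare against.

```python
import hashlib
from pathlib import Path


def input_fingerprint(data: bytes) -> str:
    """Return a short SHA-256 fingerprint of puzzle input bytes."""
    # Normalise line endings and strip surrounding whitespace so that an
    # editor rewriting newlines does not change the fingerprint.
    normalised = data.replace(b"\r\n", b"\n").strip()
    return hashlib.sha256(normalised).hexdigest()[:12]


def file_fingerprint(path: str) -> str:
    """Fingerprint an input file on disk."""
    return input_fingerprint(Path(path).read_bytes())


if __name__ == "__main__":
    # Hypothetical file name; use wherever the input was saved.
    print(file_fingerprint("input.txt"))
```

If the fingerprint printed on a laptop differs from the one printed on a desktop, the two files are not the same input, and the "wrong answer" is probably not a logic bug.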

Why We Still Code for Fun

In an era where junior developer tasks are increasingly automated, is there still value in solving toy problems about elves and sleighs? The answer is a resounding yes.

Advent of Code allows developers to sharpen tools they rarely use in their day jobs. Most enterprise work involves gluing APIs together; AoC asks for implementing depth-first searches, parsing small grammars, and reasoning about grids and coordinate systems. It is an opportunity to break free from the IDE's autocomplete and reconnect with the fundamentals of computer science.
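As an illustration of the kind of fundamentals involved, here is a minimal sketch of an iterative depth-first search that counts connected open regions in a character grid. The grid format and the `count_regions` name are made up for this example and are not taken from any actual puzzle.

```python
def count_regions(grid: list[str], wall: str = "#") -> int:
    """Count 4-connected regions of non-wall cells using an iterative DFS."""
    seen: set[tuple[int, int]] = set()
    regions = 0
    for r, row in enumerate(grid):
        for c, cell in enumerate(row):
            if cell == wall or (r, c) in seen:
                continue
            # Found an unvisited open cell: it starts a new region.
            regions += 1
            seen.add((r, c))
            stack = [(r, c)]
            while stack:
                cr, cc = stack.pop()
                for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    nr, nc = cr + dr, cc + dc
                    if (
                        0 <= nr < len(grid)
                        and 0 <= nc < len(grid[nr])
                        and grid[nr][nc] != wall
                        and (nr, nc) not in seen
                    ):
                        seen.add((nr, nc))
                        stack.append((nr, nc))
    return regions


if __name__ == "__main__":
    example = [
        "..#..",
        "..#..",
        "#####",
        "..#..",
    ]
    print(count_regions(example))  # prints 4
```

An explicit stack is used instead of recursion so that large grids do not run into Python's recursion limit.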

While the "gamification" aspect has been scaled back, the community spirit remains via private leaderboards. Companies and friend groups are still using the event for friendly rivalry, often with charitable stakes. This local-first approach to competition fosters better connection than a global list dominated by bots.

Conclusion

The 2025 iteration of Advent of Code is perhaps the most mature version of the event yet. By stepping away from the AI-fueled arms race and respecting the participants' time, its creator, Eric Wastl, has given the project a better chance at longevity. It is no longer about who is the fastest; it is about who is willing to sit with a problem, struggle with it, and learn something new in the process.